Correlation and Key Finding

Correlation

A correlation tests the strength of the relationship between two variables. Several types of correlation procedures exist, each with their own resulting coefficient based on their respective definitions. However, all correlation coefficients return values in the range from -1 to +1, where +1 is perfect correlation in the same direction, -1 is perfect correlation in opposite directions, and 0 means absolutely no relationship.

Let's look at some athlete data to do some examples:

We will start learning how to use the pandas library. For now, you can just follow along with the pseudo-code.

import pandas as pd #import pandas library

import matplotlib.pyplot as plt

from scipy.io.wavfile import read

from scipy import signal

import numpy as np

from IPython.display import Audio

#Those having librosa issues will need help ASAP!!

from librosa import feature, load

df = pd.read_csv('../Datasets/ais.csv') # function to read table data (i.e., comma separated value or .csv files)

df.head() # show first 5 rows of the table

The above table shows statistical data for a set of athletes. While the columns are not labeled in a way that we can understand, there are several columns that are pretty easy to guess: "ht" = height, "wt" = weight, "bmi" is body mass index, "pcBfat" is percent body fat, etc.

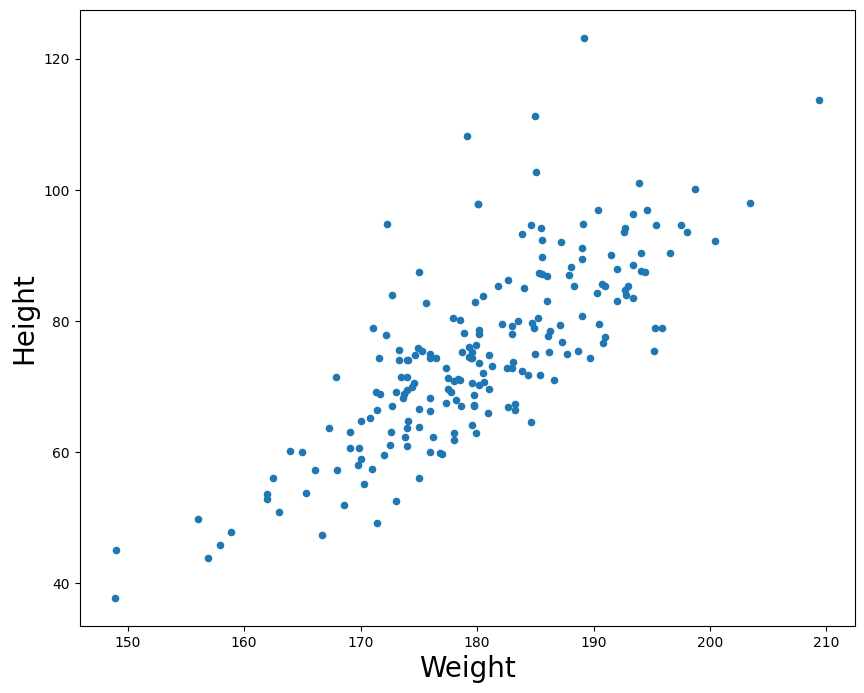

Perhaps we would want to know how tightly correlated the values for weight and height are. A good way to visualize this is by graphing first. We'll make use of the function pandas.DataFrame.plot.scatter

p = df.plot.scatter(x ='ht', y = 'wt', figsize=(10,8))

p.set_xlabel('Weight', fontsize=20)

p.set_ylabel('Height', fontsize=20)

For every value in height (x-axis) we plot the athlete's respective weight on the y-axis.

There are two types of correlations: positive and negative. When two factors are positively correlated, it means that as one variable increases, the other also increases. In this example, we can see that as a person's height in cm increases, their weight also tends to increase. If there was a perfect correlation, we could draw a straight line and all points would sit exactly on the line. This is because a perfect correlation means that for every increase in some value on the X axis, we have an exact predicted value of change on the Y axis.

If the reverse relationship is true, then we call it a negative correlation (one factor goes up as the other systematically goes down.) Note a perfect negative correlation still results in a straight line. The line would simply descend instead of ascend. The point is that the relationship between two variables can be perfectly predicted either way.

In both cases, when there is a strong correlation (either positive OR negative), movement along one variable strongly predicts movement along another.

Pearson's Product-Moment Correlation

The most common measure of correlation is Pearson's product-moment correlation, which is commonly referred to simply as "the correlation", "the correlation coefficient", or just the letter $r$ (always written in italics).

- A correlation coefficient (r value) of 1 indicates a perfect positive correlation.

- A correlation of -1 indicates a perfect negative correlation.

- A correlation of 0 indicates that there is no relationship between the two variables.

Therefore, absolute values between 0 and 1 denote the strength of the correlation, and the direction of the value (positive or negative) indicate the direction of the correlation or relationship between the variables.

numpy.corrcoef is a function for computing the Pearson product-moment correlation coefficient. It actually returns a matrix, which represents the coefficient for all possible combinations of the variables. For example, if passed two arrays, $X$ and $Y$, it will output four values, representing:

$Cov( X, X ), Cov( X, Y )$

$Cov( Y, X ), Cov( Y, Y )$

("Cov" stands for covariance).

Since what we care about is the relation between $X$ and $Y$, we can ignore the $X-X$ and $Y-Y$ comparisons. The other two vaules will be the same.

The pandas library also has a built-in function if you are already using dataframe structures (like our example above which we will soon go over) pandas.DataFrame.corr which does the same thing as the numpy function.

#Compute the correlation coefficient between the athletes' height and weight

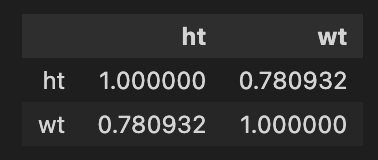

df[['ht','wt']].corr() #subset the dataframe to only look at height and weight columns; pass to correlation function

We can look at two other (nonsensical) features to examine any possible correlation

df.plot.scatter(x ='ssf', y = 'lbm', figsize=(10,8))

df[['ssf','lbm']].corr()

The above represents an example of a weak negative correlation between the factors ssf(?) and lbm (lean body mass?) with an $r$ value of -0.2 (common practice is to round to 1 or 2 decimal places).

Key Finding

I'm switching to using Librosa to read in the files, since if working with 'real' audio files it has built-in parameters that make it easier to include in a data-processing pipeline. Namely, the ability to downsample and convert to mono at the time of read-in.

Note: Librosa's load outputs a tuple that is backwards from scipy.io.wavfile.read, that is, it outputs (data, sr)

# load default is sr=22050, and convert to mono = True

(x, fs) = load('../audio/JimHendrix_HeyJoe.wav')

xn = x/np.abs(x.max()) #normalize

t = np.arange(0, len(xn)/(fs/2), 1/(fs/2))/60 #time in minutes

It's common practice when examining spectral content for large quantities of analysis to downsample by a factor of 1 (so go from 44.1 to 22.05 kHz). This reduces the overall amount of processing by 1/2. In this case, the Nyquist will be ~11kHz which still has most music data we need and doesn't impact overall results much. Note that librosa has it's own load function which does this downsampling automatically. (More on that later.)

#Sample song

# %matplotlib inline

# %config InlineBackend.figure_format = 'svg'

# plt.figure(figsize=(8,4))

plt.plot(t, xn)

plt.ylabel('Amplitude')

plt.xlabel('Time in minutes')

Audio(xn, rate=22050)

Get chromagram for first 30 seconds of audio

chromagram = feature.chroma_stft(xn[:661500].astype(float), n_fft=1024) #sr*15 = first fifteen seconds worth of samples

chromagram

array([[0.88719395, 0.63097606, 0.69450595, ..., 0.16595892, 0.30668777,

0.20207921],

[0.76095399, 0.79009987, 0.71358081, ..., 0.26933158, 0.25786502,

0.19942374],

[1. , 1. , 1. , ..., 0.5205042 , 0.37465961,

0.37350725],

...,

[0.56115379, 0.53844488, 0.47591056, ..., 0.14619396, 0.363613 ,

0.12324952],

[0.68541762, 0.56999751, 0.95144422, ..., 0.12136965, 0.26104676,

0.1139758 ],

[0.84691904, 0.66600466, 0.90938028, ..., 0.13269604, 0.36900415,

0.23517866]])

chromagram.shape

(12, 1292)

Recall that the chromagram will output a multidimensional array: here with 1292 time windows each with a vector of 12 values (one for the magnitude of each pitch class). The chromagram output vector is arranged from 0 to 12 where 0='C'

Key Detection

One major use for chromagram data is in automatic key detection tasks. The most common model is that chromagrams are computed from some file or excerpt, and each chromagram is evaluated against some kind of pitch class distribution (usually also called "key profiles" or "pitch class profiles" or "theoretical profiles" for all 24 musical (major-minor) keys. (The one below has averaged all major and minor keys respectively and represents scale-degree rather than chroma).

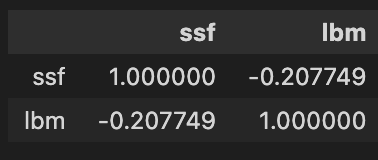

Krumhansl-Kessler Key Profiles (i.e., "KK profiles")

Krumhansl and Kessler did a famous study in the 80s, and their profiles are still one of the most widely-used today. They are widely used because they are one of the only set of values based on human perception.

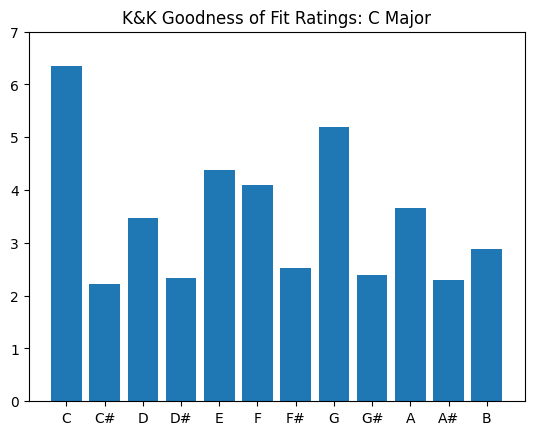

The basic idea was that, over several experiments, they asked people to rate how well a particular note "fit" following a particular cadential chord progression. The "profile" then, is the averaged ratings by chromatic scale degree (see below).

These profiles have been shown to be highly correlated with actual pitch class distributions for classical pieces. (I.e., if we count up all the notes in a Mozart sonata in C major, and report the distribution of scale-degrees as a proportion, the shape would look a lot like the profile on the left).

#profile values by pitch class

#CMajor:

major_0 = np.array([6.35,2.23,3.48,2.33,4.38,4.09,2.52,5.19,2.39,3.66,2.29,2.88]) #values: C,C#,D,D#,...B

#CMinor:

minor_0 = np.array([6.33,2.68,3.52,5.38,2.60,3.53,2.54,4.75,3.98,2.69,3.34,3.17]) #values: C,C#,D,D#,...B

We can plot each of these as a histogram:

scaledegs = ['C','C#','D','D#','E','F','F#','G','G#','A','A#','B']

plt.bar(scaledegs, major_0)

plt.ylim((0,7))

plt.title('K&K Goodness of Fit Ratings: C Major')

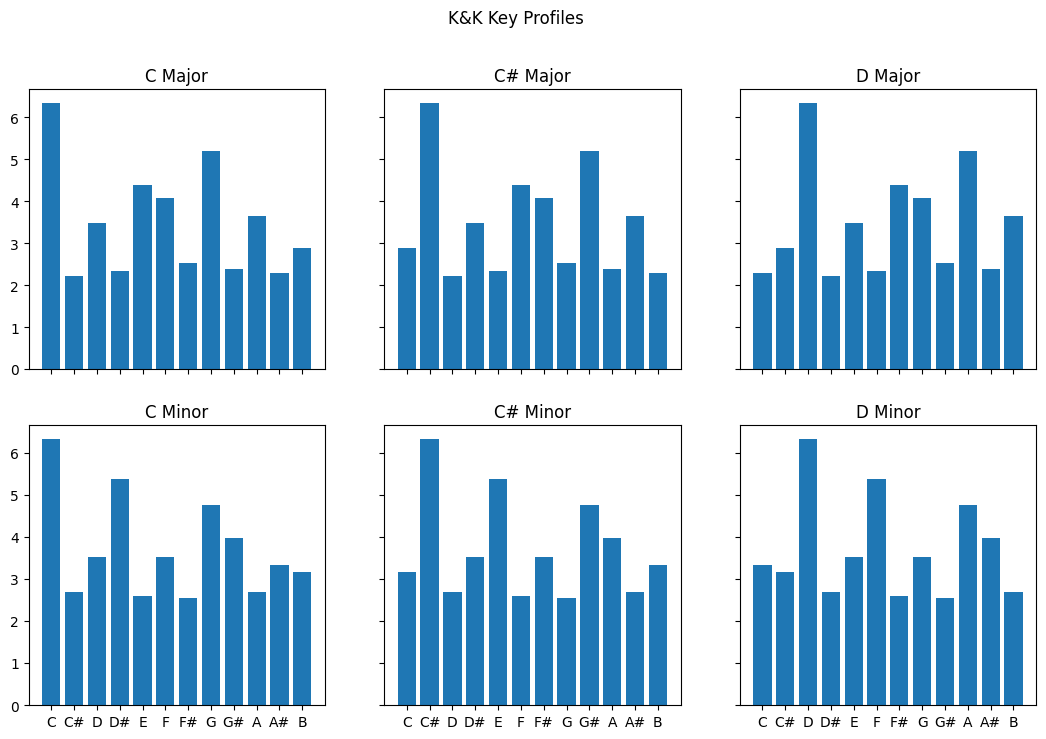

To get the "pitch class profile" values for every major key, we need to shift (or rotate) all the chromatic values by 1 semitone. (E.g., C# major will have "do" equal to "C#")

arraysDict = {}

for i in range(0,12):

arraysDict['major_{0}'.format(i)] = np.roll(major_0,i)#iteratively create each label while rotating

arraysDict['minor_{0}'.format(i)] = np.roll(minor_0,i)#np.roll is a handy shifting/rotating function

arraysDict # each number represents a semitone shift from 0 or C major / minor

{'major_0': array([6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29,

2.88]),

'minor_0': array([6.33, 2.68, 3.52, 5.38, 2.6 , 3.53, 2.54, 4.75, 3.98, 2.69, 3.34,

3.17]),

'major_1': array([2.88, 6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66,

2.29]),

'minor_1': array([3.17, 6.33, 2.68, 3.52, 5.38, 2.6 , 3.53, 2.54, 4.75, 3.98, 2.69,

3.34]),

'major_2': array([2.29, 2.88, 6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39,

3.66]),

'minor_2': array([3.34, 3.17, 6.33, 2.68, 3.52, 5.38, 2.6 , 3.53, 2.54, 4.75, 3.98,

2.69]),

'major_3': array([3.66, 2.29, 2.88, 6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19,

2.39]),

'minor_3': array([2.69, 3.34, 3.17, 6.33, 2.68, 3.52, 5.38, 2.6 , 3.53, 2.54, 4.75,

3.98]),

'major_4': array([2.39, 3.66, 2.29, 2.88, 6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52,

5.19]),

'minor_4': array([3.98, 2.69, 3.34, 3.17, 6.33, 2.68, 3.52, 5.38, 2.6 , 3.53, 2.54,

4.75]),

'major_5': array([5.19, 2.39, 3.66, 2.29, 2.88, 6.35, 2.23, 3.48, 2.33, 4.38, 4.09,

2.52]),

'minor_5': array([4.75, 3.98, 2.69, 3.34, 3.17, 6.33, 2.68, 3.52, 5.38, 2.6 , 3.53,

2.54]),

'major_6': array([2.52, 5.19, 2.39, 3.66, 2.29, 2.88, 6.35, 2.23, 3.48, 2.33, 4.38,

4.09]),

'minor_6': array([2.54, 4.75, 3.98, 2.69, 3.34, 3.17, 6.33, 2.68, 3.52, 5.38, 2.6 ,

3.53]),

'major_7': array([4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88, 6.35, 2.23, 3.48, 2.33,

4.38]),

'minor_7': array([3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17, 6.33, 2.68, 3.52, 5.38,

2.6 ]),

'major_8': array([4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88, 6.35, 2.23, 3.48,

2.33]),

'minor_8': array([2.6 , 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17, 6.33, 2.68, 3.52,

5.38]),

'major_9': array([2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88, 6.35, 2.23,

3.48]),

'minor_9': array([5.38, 2.6 , 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17, 6.33, 2.68,

3.52]),

'major_10': array([3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88, 6.35,

2.23]),

'minor_10': array([3.52, 5.38, 2.6 , 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17, 6.33,

2.68]),

'major_11': array([2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88,

6.35]),

'minor_11': array([2.68, 3.52, 5.38, 2.6 , 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17,

6.33])}

Let's see a plot of a few so we see what we are doing:

#For clarity and demonstration purposes:

c_sharp = arraysDict['major_1'] #grab the 'major_1' array from the dictionary...

d = arraysDict['major_2']

c_minor = arraysDict['minor_0']

cs_minor = arraysDict['minor_1']

d_minor = arraysDict['minor_2']

fig, ax = plt.subplots(2, 3, sharex='col', sharey='row', figsize=(13,8))# axes are in a two-dimensional array, indexed by [row, col]

ax[0,0].bar(scaledegs, major_0)

ax[0,0].set_title("C Major")

ax[0,1].bar(scaledegs, c_sharp)

ax[0,1].set_title("C# Major")

ax[0,2].bar(scaledegs, d)

ax[0,2].set_title("D Major")

ax[1,0].bar(scaledegs, c_minor)

ax[1,0].set_title("C Minor")

ax[1,1].bar(scaledegs, cs_minor)

ax[1,1].set_title("C# Minor")

ax[1,2].bar(scaledegs, d_minor)

ax[1,2].set_title("D Minor")

fig.suptitle('K&K Key Profiles')

By creating a separate array for each possible major scale, we can then take the values we have computed from our chromagrams and calculate correlations with each of the 24 profiles to estimate which one is the best match.

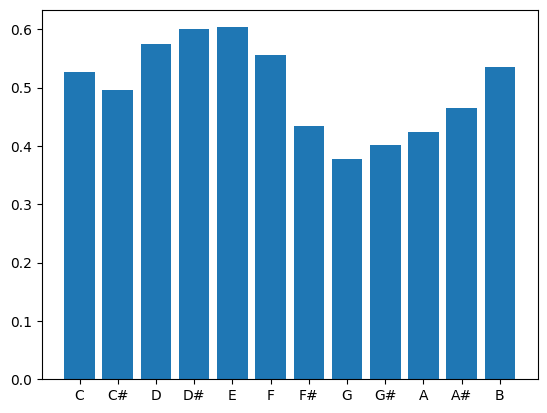

Let's start with an example where we simply take the mean across all windows:

chromagram.shape

(12, 1292)

avg = chromagram.mean(axis=1) # Take average chromagram values for entire duration of clip

avg

array([0.52692458, 0.49668143, 0.57444444, 0.60058 , 0.60336632,

0.55506144, 0.43473962, 0.37813515, 0.40101331, 0.42385475,

0.46426206, 0.53536389])

#Let's plot this

plt.bar(scaledegs, avg)

#Compute correlation between these values and each possible vector from the array dictionary.

for key, value in arraysDict.items():

r = np.corrcoef([avg,value])

print(key,r[0][1])

major_0 0.053231553733300525

minor_0 0.09449950609494047

major_1 -0.21851384441650823

minor_1 0.05187944555177345

major_2 -0.11686680103746123

minor_2 0.2225591480277098

major_3 0.008960233285577738

minor_3 0.18350198400208498

major_4 0.13999186006990066

minor_4 0.1577643990999423

major_5 0.08784163817363728

minor_5 -0.02560566546205075

major_6 -0.1864434954860319

minor_6 -0.2968163922432239

major_7 -0.11142081529743578

minor_7 -0.27736239638080856

major_8 -0.04866503188053358

minor_8 -0.055181925712627894

major_9 0.047700267639869114

minor_9 0.033615910545926664

major_10 0.1655814614357695

minor_10 -0.12202704790513115

major_11 0.17860297377991594

minor_11 0.033173034381464794

The highest positive correlation is .25 for minor_2 which is D minor, followed by major_10 which is Bb major at .19. The problem is, humans don't really agree on the key for 'Hey Joe'. The song's chord progression follows an ascending circle-of-fifths using only major harmonies: CM - GM - DM - AM -EM. Because it tends to cadence on E, people most commonly say the piece is in E major.

The correlation with E major is 0.13, which is the fourth-best guess according to this simple model (E minor gets score of .15).

Another try

(x, fs) = load('../audio/eleanorrigby.wav', sr=22050)

xnorm = x/np.abs(x.max())

from IPython.display import Audio

Audio(xnorm, rate=fs)

chromagram = feature.chroma_stft(xnorm[:661500], n_fft=2048) #first 15 seconds only

avg = chromagram.mean(axis=1)# Take average chromagram values for entire duration of clip (i.e. across rows)

for key, value in arraysDict.items():

r = np.corrcoef([avg,value])

print(key,r[0][1])

major_0 0.480344863421704

minor_0 0.12062872184563107

major_1 -0.578761655956151

minor_1 -0.010491913468391647

major_2 -0.03531130771993445

minor_2 -0.26250404299627084

major_3 -0.1438796698760917

minor_3 -0.27208823777170166

major_4 0.5194485522221685

minor_4 0.9247004462167714

major_5 -0.12550908898854368

minor_5 -0.1904134473852289

major_6 -0.3787455243388288

minor_6 -0.4037272817151577

major_7 0.515012468854908

minor_7 -0.025707354686128628

major_8 -0.30574409131323305

minor_8 0.21753027065436037

major_9 -0.012264439174864014

minor_9 0.21241404441245604

major_10 -0.2756164767525056

minor_10 -0.59611699776902

major_11 0.3410263696213718

minor_11 0.2857757926626805

This piece has the highest positive correlation with E minor at .92, followed by E major at .51, followed by G major at .51. Most people agree this piece is in E minor (though given the melody, it might better be described as in E dorian, which is the same as the natural minor with a raised 6th! Yet another problem for popular music...)

Testing different systems

It is possible that we might get better results if we examined the last thirty seconds of a song (this would be especially true for classical music).

An alternative system might break down the song into several slices of, say, 10 to 30 seconds each and compute the average of the chromagram for that duration of time. When there might be a "close tie" for first place, doing it this way would allow the comparison of multiple arrays of r values, and you could then examine the global average in comparison with the highest positive values across each array to examine how many times the highest average value of each time frame aligned with the global average.

In our activity we will try a few different approaches and compare output.

Testing different possible key profiles

Here we examined only the relation between the chromagram data and the Krumhansl-Kessler profiles. These are strongly biased towards distributions in Western classical music. However, they are the only key profile values in use that are *psychologically collected*. There are other profiles based on the distributions of notes in various data collections that have been tested and may work slightly better on popular music. For instance, a profile by David Temperley has been known to do better for pop music. There are many others, for instance...

from music21 import analysis

a = analysis.discrete.KrumhanslSchmuckler() #These are the same ones we are already using.

a.getWeights(weightType='major')

[6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]

a = analysis.discrete.SimpleWeights() #Super simple weighting based on 3 categories: In triad (2), in diatonic collection (1) or chromatic (0)

a.getWeights(weightType='major')

[2, 0, 1, 0, 1, 1, 0, 2, 0, 1, 0, 1]

a = analysis.discrete.TemperleyKostkaPayne()

a.getWeights(weightType='major')

[0.748, 0.06, 0.488, 0.082, 0.67, 0.46, 0.096, 0.715, 0.104, 0.366, 0.057, 0.4]

a = analysis.discrete.AardenEssen()

a.getWeights(weightType='major')

[17.7661,

0.145624,

14.9265,

0.160186,

19.8049,

11.3587,

0.291248,

22.062,

0.145624,

8.15494,

0.232998,

4.95122]

a = analysis.discrete.BellmanBudge()

a.getWeights(weightType='major')

[16.8, 0.86, 12.95, 1.41, 13.49, 11.93, 1.25, 20.28, 1.8, 8.04, 0.62, 10.57]

Challenges

There are many reasons that automatic key detection is a non-trivial task for a computer. The biggest challenge for automatic key detection systems is that it can even be a challenging task for humans! For instance, when a piece has changes of the key (modulations) and/or many notes that are outside of the theoretical note distribution of the key, the order and timing of notes becomes very important.

Currently key detection works best for popular music that stays (mostly) within a single key for the duration of the piece, or music where the largest proportion of the song stays in one key. They also tend to work slightly better with a larger window for finer spectral resolution (e.g., fft size =~ 4096-8192) and a fairly large overlap of 50% or higher.

Chromagrams are typically generated with A=440 tuning, and so any recording that deviates from this will need to be adjusted. (See Librosa package details for how to do that!)

A final alternative approach might be to collect many key profiles, evaluate the song chromagram against every possible profile, and look at the predictions from each one, and assuming at least two models converge (agree), take that answer.

If we wanted to compare different key profiles, we have to ensure to normalize each one first!